Introduction

The Financial Times recently reported that fraudsters used a deepfake bot to deceive an employee of a Hong Kong company into transferring $25 million. This is not the first fraud of its kind. What sets this case apart is its unprecedented scale and the use of artificial intelligence to impersonate the company’s chief financial officer. It is one of many recent stories of this kind, and the trend is becoming a more serious cause for alarm.

Artificial Intelligence (AI) can pose a threat, but it also presents a valuable opportunity for financial institutions. AI can analyse large volumes of data, helping these institutions manage risk more efficiently and even take part in trading in capital markets.

The European Commission is taking a significant step towards regulating AI by proposing the first-ever legal framework on AI. This progressive move not only acknowledges the risks associated with AI but also positions Europe as a global leader in governing this technology.

The EU AI Act will have a wide-ranging impact, encompassing both AI system providers and users from the EU and beyond, as long as their outputs are utilised within the borders of the European Union.

Towards Responsible and Trustworthy AI

The proposal, referred to as the EU AI Act, aims to ensure that AI in Europe is developed, deployed, and used in an ethical and responsible manner. It focuses on upholding fundamental rights, promoting transparency and accountability, and tackling potential biases in AI systems. The Act emphasises human oversight and safeguards against AI systems that may pose risks to safety, privacy, or legal rights.

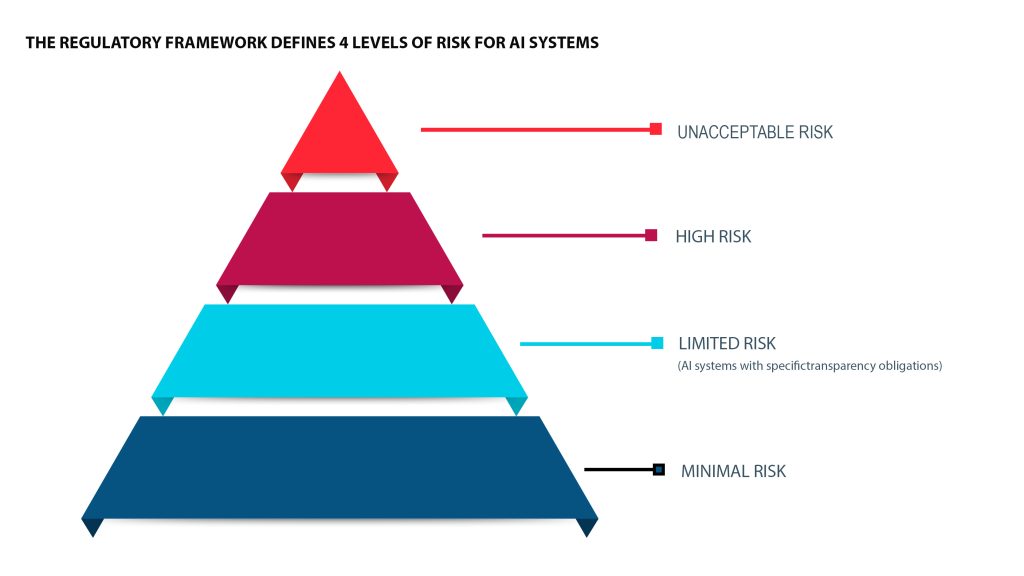

The EU Artificial Intelligence Act takes a risk-based, horizontal approach to regulating AI and classifies AI systems into four risk categories: unacceptable, high, limited, and minimal risk. [1]

The classification depends on the potential harm AI systems may cause, such as endangering life or violating rights. High-risk AI systems, like those used in critical infrastructure, will be subject to stricter regulatory requirements and conformity assessments before deployment.

The implementation of the EU AI Act has considerable implications for anti-money laundering (AML) and fraud prevention operations in the banking industry. The European Commission is currently considering classifying the use of AI in financial services as “high risk” under the EU AI Act.

To meet the AI Act’s requirements for high-risk AI systems, institutions need a comprehensive framework covering risk management, data governance, documentation, and transparency. [2]

The EU AI Act is guided by two key objectives: promoting the adoption of artificial intelligence (AI) and addressing the risks associated with the technology. It lays out the European vision for trustworthy AI, with a comprehensive framework that aims to safeguard users and society at large. [3]

- Protection of European citizens against AI misuse

- Guaranteeing transparency and trust

- Catalysing innovation without sidelining safety and privacy

The EU AI Act imposes significant penalties for violations involving prohibited systems: fines of up to €35,000,000 or 7% of the company’s worldwide annual turnover for the preceding financial year, whichever is higher. [4]
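The "whichever is higher" rule can be made concrete with a short calculation. The following Python sketch is illustrative only: the function and constant names are hypothetical, the figures are the ones quoted above, and the result is the upper bound on a fine for prohibited systems, not a prediction of any actual penalty.

```python
# Illustrative sketch only: names are hypothetical, figures are those quoted in the text.
FIXED_CAP_EUR = 35_000_000      # fixed ceiling for prohibited-system violations
TURNOVER_SHARE_PERCENT = 7      # share of worldwide annual turnover


def max_fine_prohibited_systems(worldwide_annual_turnover_eur: int) -> int:
    """Return the fine ceiling in whole euros: the higher of the two amounts."""
    if worldwide_annual_turnover_eur < 0:
        raise ValueError("turnover cannot be negative")
    # Integer arithmetic avoids floating-point rounding on large euro amounts.
    turnover_based = worldwide_annual_turnover_eur * TURNOVER_SHARE_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_based)


if __name__ == "__main__":
    for turnover in (100_000_000, 500_000_000, 1_000_000_000):
        ceiling = max_fine_prohibited_systems(turnover)
        print(f"turnover EUR {turnover:,} -> ceiling EUR {ceiling:,}")
```

On these figures the fixed amount is the binding ceiling for any company with worldwide turnover below €500 million; above that level the 7% share takes over.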

The Impact of the EU AI Act in the Financial Services Sector

The AI Act is poised to become a global standard for regulating the ethical use of AI, much as the General Data Protection Regulation (GDPR) has had a far-reaching impact worldwide. This groundbreaking legislation transcends geographical boundaries and applies to all domains, including the financial sector.

For financial institutions, which heavily rely on AI for crucial functions such as fraud detection, algorithmic trading, risk analysis, and improving customer experiences, the AI Act presents both opportunities and challenges.

Financial institutions must adapt their AI systems to align with the Act’s guidelines, particularly when it comes to high-risk applications like credit scoring. The AI Act emphasises the importance of transparent and interpretable AI models, and mandates the use of unbiased, high-quality data. [4]

The AI Act will have a significant impact on the financial sector through various channels. [5]

- One noteworthy effect will be on the banking industry, specifically concerning AI-driven credit evaluations and assessments of risk and pricing in life and health insurance, both of which are considered high-risk applications of AI technology.

- Moreover, the Act will impose new rules on what are referred to as general-purpose AI systems, which include large language models and generative AI applications.

- Additionally, the European data strategy, encompassing legislative measures such as the Data Act and the Data Governance Act, will also be influenced by the AI Act.

Considering the widespread usage of AI in various applications such as claims management, anti-money laundering, or fraud detection within the financial services sector, it is imperative for regulators to evaluate the adequacy of existing regulations and identify areas that may require additional guidance for specific use cases. This evaluation must take into account factors such as proportionality, fairness, explainability, and accountability.

Conclusion

On March 13, 2024, the European Parliament officially endorsed the Artificial Intelligence Act, which aims to ensure safety and respect for fundamental rights while stimulating innovation.

The regulation is currently undergoing a final review by legal experts and linguists, and it is expected to be formally adopted prior to the conclusion of the legislative session, following the corrigendum procedure. Additionally, the law must receive formal endorsement from the Council.

Once published in the Official Journal of the European Union, the regulation will enter into force twenty days later and will be fully applicable twenty-four months after that. However, certain measures follow different timelines. Bans on prohibited practices will apply six months after entry into force, codes of practice nine months after, and general-purpose AI rules, including governance, twelve months after. Finally, obligations for high-risk systems will apply thirty-six months after entry into force.
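The staggered timeline above can be turned into calendar dates once a publication date is known. The Python sketch below is a rough planning aid under stated assumptions: the publication date used in the example is a hypothetical placeholder, the function and dictionary names are invented for illustration, and simple month arithmetic may differ from the exact application dates fixed in the final legal text.

```python
import calendar
from datetime import date, timedelta

# Offsets in months from entry into force, as listed in the text above.
MILESTONE_OFFSETS_MONTHS = {
    "bans on prohibited practices": 6,
    "codes of practice": 9,
    "general-purpose AI rules and governance": 12,
    "general application": 24,
    "high-risk system obligations": 36,
}


def add_months(start: date, months: int) -> date:
    """Shift a date by whole months, clamping to the last day of short months."""
    total = start.month - 1 + months
    year = start.year + total // 12
    month = total % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def ai_act_milestones(publication_date: date) -> dict:
    """Map each milestone to an indicative date (a planning estimate, not legal advice)."""
    entry_into_force = publication_date + timedelta(days=20)
    milestones = {"entry into force": entry_into_force}
    for name, offset in MILESTONE_OFFSETS_MONTHS.items():
        milestones[name] = add_months(entry_into_force, offset)
    return milestones


if __name__ == "__main__":
    # Hypothetical publication date, used only to demonstrate the arithmetic.
    for name, when in ai_act_milestones(date(2024, 6, 1)).items():
        print(f"{when.isoformat()}  {name}")
```

A compliance team could feed in the actual publication date once it is known and use the resulting dates as a first draft of its implementation plan, checking each one against the published regulation.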

Sources:

1. Four risk categories: https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai#:~:text=The%20AI%20act%20aims%20to,%2Dsized%20enterprises%20(SMEs).

2. Implementation of the AI Act: https://fintech.global/2024/01/30/navigating-the-eu-ai-act-a-strategic-guide-for-aml-and-fraud-prevention-in-banking/

3. Purpose of the EU AI Act: https://www.consultancy.eu/news/9392/european-ai-act-implications-for-the-financial-services-industry

4. Penalties of the EU AI Act: https://www.holisticai.com/blog/penalties-of-the-eu-ai-act#:~:text=The%20heftiest%20fines%20are%20given,annual%20worldwide%20turnover%20for%20companies.

5. Impact on the financial services sector: https://www.consultancy.eu/news/9392/european-ai-act-implications-for-the-financial-services-industry

6. Next steps on the EU AI Act: https://www.fintechfutures.com/2024/02/how-the-eu-ai-act-could-transform-financial-services/

Contact us

Stuart Thomson

Partner,

Aspect Advisory
